By now, if you code with LLMs, you’ve probably seen one say something like ‘You’re absolutely right!’

This usually comes after you’ve pointed out an error the LLM made, and it serves as a useful reminder that you’re still just talking to a machine. (For this reason, I advise against prompting it to stop saying it.)

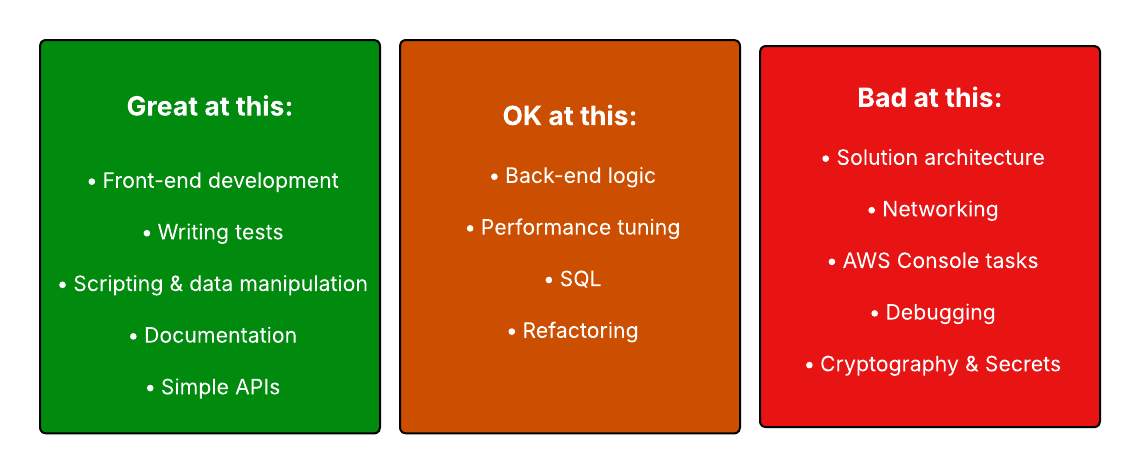

LLMs are transforming how software gets written, but not all code is created equal, and neither is all context. Some dev tasks are a perfect fit for Claude Code. Others? Not so much.

If you’ve used LLMs, particularly Claude Code, in a context-engineering workflow, you know that speed often comes at the cost of spaghetti code, extraneous logic and oversimplified decision-making.

Choosing what LLM output to push straight through to production, what to triage and what to take with a pinch of salt is most definitely an acquired skill.

This issue will help you get sharper about what to delegate, what to double-check, and what to never trust the model with at all.

What LLMs Are Great At

These tasks have a clear structure, strong patterns, and minimal ambiguity. Claude excels here:

Front-end development

LLMs scaffold components, write React logic and apply Tailwind styles with surprising fluency.

Writing tests

Claude is good at inferring unit and integration tests from function names or existing code.

Scripting & data manipulation

Shell scripts, Python CLI tools, CSV cleaning: basically anything small, repeatable and task-scoped.

Documentation

Code comments, API docs and READMEs, especially when it can read the code first.

Simple APIs

For CRUD endpoints, FastAPI routes and lightweight Express apps, Claude handles the boilerplate well.

What LLMs Are Just OK At

These tasks are doable, but require a sharp eye. Expect useful drafts, not final answers.

Back-end logic

Claude can suggest patterns and structure, but it won’t catch deep, domain-specific edge cases.

Performance tuning

It may offer plausible advice, but that advice is rarely context-aware or backed by benchmarks.

SQL

Good at basic queries and joins, but not always performant or semantically correct for complex data models.

Refactoring

Fine for renaming, flattening and reorganising, but risky when the logic is nuanced or tightly coupled.
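A classic way SQL goes semantically wrong on a non-trivial data model is join fan-out: joining a parent table to two independent child tables multiplies rows, so `SUM` silently double-counts. The sketch below uses Python’s built-in `sqlite3` with a made-up orders/items/payments schema (hypothetical, for illustration only) to put a plausible-looking query next to a corrected one that aggregates each child table before joining.

```python
import sqlite3

# Hypothetical schema: one order with two line items and two partial payments.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders   (id INTEGER PRIMARY KEY);
    CREATE TABLE items    (order_id INTEGER, amount REAL);
    CREATE TABLE payments (order_id INTEGER, amount REAL);
    INSERT INTO orders   VALUES (1);
    INSERT INTO items    VALUES (1, 30.0), (1, 70.0);
    INSERT INTO payments VALUES (1, 40.0), (1, 50.0);
""")

# Plausible-looking query: joining both child tables yields 2 x 2 = 4 rows,
# so each item amount is counted once per payment row (and vice versa).
naive = conn.execute("""
    SELECT o.id, SUM(i.amount) AS item_total, SUM(p.amount) AS paid_total
    FROM orders o
    JOIN items i    ON i.order_id = o.id
    JOIN payments p ON p.order_id = o.id
    GROUP BY o.id
""").fetchone()

# Corrected query: aggregate each child table on its own, then join the totals.
fixed = conn.execute("""
    SELECT o.id, i.item_total, p.paid_total
    FROM orders o
    JOIN (SELECT order_id, SUM(amount) AS item_total
          FROM items GROUP BY order_id) i ON i.order_id = o.id
    JOIN (SELECT order_id, SUM(amount) AS paid_total
          FROM payments GROUP BY order_id) p ON p.order_id = o.id
""").fetchone()

print("naive:", naive)  # → (1, 200.0, 180.0): both totals doubled
print("fixed:", fixed)  # → (1, 100.0, 90.0)
```

Both queries run without error, which is exactly why this kind of bug survives a quick review.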

What Needs Rigorous Double-Checking

These areas are risky because Claude lacks the context, stakes are high, or both:

Solution architecture

Models don’t weigh trade-offs or understand business constraints. They’re good at patterns, not decisions.

Networking

Protocols, ports, latency, firewalls: Claude often oversimplifies or misrepresents how systems interact.

AWS Console tasks

The documentation it learned from is often outdated, features change frequently, and Claude guesses with confidence.

Debugging

Unless you hand them over, Claude doesn’t have your stack trace, logs or real-time system state. It’s working blind.

Cryptography & Secrets

Never trust an LLM to invent or implement crypto. Never expose secrets. Ever.
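In practice, “never invent crypto” means asking the model to wire up vetted primitives, not to design a scheme. A minimal Python sketch using only the standard library: `hashlib.pbkdf2_hmac`, `hmac.compare_digest` and `secrets.token_bytes` are real APIs, while `hash_password`, `verify_password`, `load_api_token` and the `API_TOKEN` variable are hypothetical names. The iteration count is an assumption to check against current guidance, and for new systems a dedicated password-hashing library (Argon2 or bcrypt) may be the better choice.

```python
import hashlib
import hmac
import os
import secrets

# Assumed work factor for PBKDF2-HMAC-SHA256; verify against current guidance.
ITERATIONS = 600_000

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) using a random 16-byte salt."""
    salt = secrets.token_bytes(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the key and compare in constant time (no early exit on mismatch)."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(key, expected)

def load_api_token() -> str:
    """Read a secret from the environment instead of hard-coding it in source."""
    token = os.environ.get("API_TOKEN")  # hypothetical variable name
    if token is None:
        raise RuntimeError("API_TOKEN is not set")
    return token

salt, key = hash_password("correct horse battery staple")
ok = verify_password("correct horse battery staple", salt, key)
bad = verify_password("wrong guess", salt, key)
print(ok, bad)  # → True False
```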

Why LLM Performance Varies So Much

If you’ve noticed that Claude sometimes feels like a senior engineer and sometimes like an intern guessing in the dark, you’re not alone.

Here’s why that happens:

1. Some Tasks Are Inherently Harder

LLMs excel at narrow, repeatable, language-based tasks. The moment you ask for cross-cutting logic, architectural trade-offs, or design decisions, they struggle because those things require real reasoning, not pattern recognition.

2. Claude Doesn’t Know What It Can’t See

LLMs inherently have a lot of unknown unknowns. Claude Code can access your current codebase. But it can’t see:

- Linked repos
- Org-wide policies
- Cloud configuration
- Teammates’ uncommitted or unpushed work
- What your team agreed to last sprint
- Your hard-earned experience of what really works in prod

If that external context matters, Claude won’t (and can’t) guess it correctly.

3. When in Doubt, It Hallucinates

Claude is confident but not cautious.

When it encounters ambiguity or missing context, it fills in the gaps based on probability, not accuracy. That’s fine for placeholder text, not fine for security tokens or IAM policy advice.
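One cheap guardrail for the IAM case is to lint any model-suggested policy before it goes near an account. The sketch below parses a policy document in the standard `Version`/`Statement`/`Effect`/`Action`/`Resource` JSON shape and flags `Allow` statements that use full wildcards. `find_wildcards` is a hypothetical helper, not an AWS tool: it ignores `NotAction`, `NotResource`, conditions and partial patterns like `s3:Get*`, so treat it as a first filter, not a policy review.

```python
import json

def _as_list(value):
    """IAM accepts either a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value or [])

def find_wildcards(policy_json: str) -> list[str]:
    """Return warnings for Allow statements with '*' actions or resources."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    warnings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action")):
            if action == "*" or action.endswith(":*"):
                warnings.append(f"statement {i}: broad action {action!r}")
        for resource in _as_list(stmt.get("Resource")):
            if resource == "*":
                warnings.append(f"statement {i}: unrestricted resource")
    return warnings

# A policy of the kind a model might confidently suggest.
suggested = """
{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
    {"Effect": "Allow", "Action": ["s3:GetObject"],
     "Resource": "arn:aws:s3:::example-bucket/*"}
  ]
}
"""
for warning in find_wildcards(suggested):
    print(warning)
```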

How to Get Better Output from Claude

Even when Claude doesn't have the full picture, you can still improve its reliability dramatically:

1. Be Explicit About What’s Missing

If your request depends on an external system, tell the model.

Bad:

“Update the login flow to use our new auth provider.”

Better:

“Replace AuthLegacy with the AuthX provider. AuthX is in a separate repo. Preserve existing MFA fallback logic.”

If it’s out of scope, name it. Claude is far less likely to invent what you’ve clearly marked as unknown.
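If you send this kind of request often, it can help to make “what’s missing” a required field rather than something you remember to type. A small Python sketch, assuming you assemble prompts in code; `build_prompt` and its parameters are hypothetical, and the result is just a string you’d paste into (or pipe to) Claude.

```python
def build_prompt(task: str, out_of_scope: list[str], constraints: list[str]) -> str:
    """Assemble a request that names unknowns explicitly instead of leaving gaps."""
    lines = [f"Task: {task}", "", "Out of scope (do not guess; leave a TODO instead):"]
    # An empty list falls back to an explicit "(none)" so the section is never silent.
    lines += [f"- {item}" for item in out_of_scope] or ["- (none)"]
    lines += ["", "Constraints:"]
    lines += [f"- {item}" for item in constraints] or ["- (none)"]
    return "\n".join(lines)

print(build_prompt(
    task="Replace AuthLegacy with the AuthX provider.",
    out_of_scope=["AuthX internals (separate repo)"],
    constraints=["Preserve existing MFA fallback logic."],
))
```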

2. Use Markdown to Anchor Expectations

Claude pays close attention to structured .md files (Claude Code, for example, loads a CLAUDE.md file from your project as standing instructions). Use that to your advantage:

## You are

- A backend engineer working in a microservices environment.
- You can only see this codebase.

## Constraints

- Don’t invent details about external services.
- If something’s missing, add a TODO comment.

This kind of context shapes not just the model’s tone but its behaviour.

3. Ask Claude to Admit Uncertainty

You can encourage Claude to flag what it doesn’t know:

“If anything is unclear or out of scope, include a TODO: comment instead of guessing.”

This invites the model to pause and flag the gap, as a careful junior developer would. That’s a good thing.
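Asking for TODOs only helps if someone reads them before merge. A minimal sketch of a check that lists every `TODO:` marker in a set of files, so placeholders the model left behind get reviewed rather than shipped. `find_todos` is a hypothetical helper, and the demo writes a throwaway file; in CI you might pass the files changed in a PR and fail the job when the list is non-empty.

```python
import re
import tempfile
from pathlib import Path

# Matches the "TODO:" convention requested in the prompt.
TODO_PATTERN = re.compile(r"\bTODO:")

def find_todos(paths: list[Path]) -> list[tuple[str, int, str]]:
    """Return (file_name, line_number, stripped_line) for every TODO: marker."""
    hits = []
    for path in paths:
        text = path.read_text(encoding="utf-8", errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if TODO_PATTERN.search(line):
                hits.append((path.name, lineno, line.strip()))
    return hits

# Demo on a throwaway file standing in for model-generated code.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "auth.py"
    sample.write_text(
        "def login(user):\n"
        "    # TODO: AuthX token refresh lives in another repo; confirm API\n"
        "    return user\n",
        encoding="utf-8",
    )
    todos = find_todos([sample])

for name, lineno, line in todos:
    print(f"{name}:{lineno}: {line}")
```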

The Real Lesson

LLMs aren’t broken. They’re just incomplete. They know what you show them, not what you assume they know.

Claude Code is an excellent teammate as long as you remember who’s responsible for the output. It’s you, bro.

Use it for speed.

Build guardrails for safety.

And always design your context like an engineer.